The follow-up article: keeping abreast of the field – Interview with Paul Pavlidis and Jesse Gillis

| 6 November, 2013 | Michael Markie |


Last week we announced our three distinct amendment options for F1000Research articles. One of the options is a ‘follow-up’ article, which has been designed for authors who have published a paper that would benefit from regular additions to ensure that the content is both current and relevant to its readers.

The need for this type of article became apparent whilst working with F1000Research authors Paul Pavlidis (Centre for High-Throughput Biology, University of British Columbia) and Jesse Gillis (Cold Spring Harbor Laboratory). Working in a dynamic field of science and keen to document the latest research as it became available, they introduced the idea of creating a ‘living article’ in which any developments since their initial publication could be documented in annual updates. With our unique publication model, we were able to facilitate such an article: each update is a new, individually citable publication that is clearly linked to the previous article(s).

Here, you can access the article Progress and challenges in the computational prediction of gene function using networks: 2012-2013 update recently published by Paul and Jesse. In the interview below, they explain their research in more detail and describe how F1000Research has helped them keep abreast of the recent developments in the field.

You published your first opinion piece on the progress and challenges in computational prediction of gene function last year; can you explain why you wrote it?

Over the last few years, we’ve been studying limitations in the use of gene networks. While our publications document different issues, they also reflect a bigger picture which none of our papers expressed very clearly. Our opinion piece was an attempt to articulate our synthesis of those ideas.

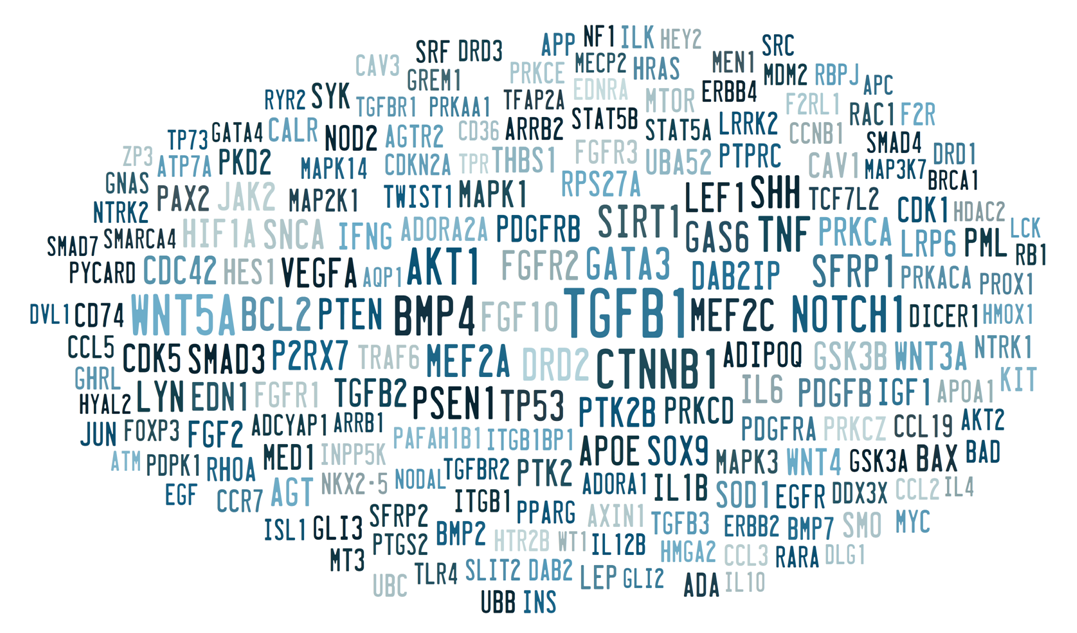

The original research behind the article was inspired by the interest we and many others had in using existing data to find novel features characterizing gene function. Many of us had claimed some degree of success in doing this with computational methods. Whether explicitly or not, most methods rely on the idea of “guilt by association”, which means inductively reasoning that a gene possesses some function based on its similarity (in some sense) to other genes possessing that function. These similarities are represented as networks (of interaction or similarity such as co-expression).
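To make the “guilt by association” idea concrete, here is a minimal sketch of one simple form of it, neighbor voting, in which an unannotated gene is scored by the fraction of its network neighbors already annotated with a function. This is an illustration only and not necessarily the method used in the authors' papers; the function name `neighbor_voting`, the gene labels and the toy network are all hypothetical.

```python
from collections import defaultdict


def neighbor_voting(edges, known_genes):
    """Score each unannotated gene by the fraction of its neighbors
    that are already annotated with the function of interest."""
    # Build an undirected adjacency map from the edge list.
    neighbors = defaultdict(set)
    for a, b in edges:
        neighbors[a].add(b)
        neighbors[b].add(a)

    scores = {}
    for gene, nbrs in neighbors.items():
        if gene in known_genes:
            continue  # only score genes whose function is unknown
        scores[gene] = len(nbrs & known_genes) / len(nbrs)
    return scores


# Hypothetical toy network (e.g. co-expression links); not real data.
edges = [("A", "B"), ("A", "C"), ("B", "C"), ("C", "D"), ("D", "E")]
known = {"A", "B"}  # genes assumed to be annotated with the function

scores = neighbor_voting(edges, known)
for gene in sorted(scores, key=scores.get, reverse=True):
    print(gene, round(scores[gene], 2))
```

In this toy network, gene C ranks highest because two of its three neighbors carry the annotation, while D and E receive no votes.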

Biologists can sometimes infer that a gene has a given function by looking closely at the data, but we came to appreciate that simply automating that reasoning hasn’t had the impact one would hope for. The computational methods have tended not to generalize well, so there are still lots of poorly characterized genes. Consequently, researchers in our field continue to think there’s room for improvement and spend a lot of effort developing new methods. We took a step back to try to figure out what was going on.

What we documented was that a lot of the performance of the function inference methods relies on either generic observations like “P53 is important” or features that don’t generalize well to new data. At the time we discussed this work with other researchers quite a bit. It turns out there’s widespread recognition that the approaches don’t work quite as well as expected, but the reasons people gave as explanations implied only modest problems. The commentary was our attempt to build some consensus around the specific and centrally important problems which need to be addressed.

Why did you decide it would be relevant to document the progress and challenges annually?

Genetics is fast moving and network learning of gene function is moving along with it. There’s a lot of work each year and a living review seems a great way to keep track of progress. It’s particularly important to us because we want to see the major problems in the field addressed. A continuous commentary seems a useful way of keeping other researchers from re-inventing the wheel with superficial results that suffer from hidden defects. We also want to bring attention to areas of progress where we see it.

How does F1000Research’s publication model help you continuously document your findings?

We heard about F1000Research just as we were considering writing our first article in 2012. It seemed an ideal way to get the ideas out there promptly, with updates, and have some degree of conversation with the field as a whole. Certainly, any conventional publication format wouldn’t really have been suitable (in terms of having a continuous series kept quite close to up-to-date and easy to read through sequentially). We hope to continue updating yearly.

How have you found publishing your work in F1000Research: would you recommend it to your colleagues?

Publishing at F1000Research has been a pleasure. It was almost unimaginably fast by any standard (days) and just about ideal for our purposes. We have recommended it to our colleagues and will continue to do so, both for publication and as a place to read valuable material (often particularly in the reviewer comments).
